The PowerHome URL Scraper plugins (URLScraper and URLScraper1) parse data from designated web pages in much the same way as the ph_regex() family of string search functions. The difference is that the scraper plugins run in the background in their own independent thread and take no PowerHome resources.

The preferred scraper is "URLScraper", the most general implementation, which uses the Catalyst HTTP Control functions. In the rare case where a particular website is incompatible with the Catalyst HTTP Control, the alternative URLScraper1 is available. It uses raw socket communications and will generally succeed.

The regex search uses the VBScript regular expression engine, the same engine used by the newer ph_regex2 functions. Full details, and good tutorials, on regular expression syntax can be found here:

http://www.regular-expressions.info/quickstart.html

To use the scraper:

1) define the plugin in PowerHome Explorer>Setup>Plugins,

2) modify or replace the default initialization file "c:\powerhome\plugins\urlscraper.ini", and

3) create a trigger event in the PowerHome Explorer Triggers table (to call a Macro or other Action).

A definition for the URLScraper (or URLScraper1) plugin must be entered in the PowerHome Explorer>Setup>Plugins table. The 'Load Order' number does not have to match the example; any sequence number is appropriate. See the discussion above regarding the choice between "URLScraper" and "URLScraper1".

The example image below shows both plugin versions defined for reference purposes, although normally only one would be used.

```ini
[URL_1]
url=http://m.wund.com/cgi-bin/findweather/getForecast?brand=mobile&query=32712
freq=0.5
scrapecount=2

[URL_1_1]
;Temperature {The ";" makes this a comment line}
regexsearch=<td>Temperature</td>[\s\S]*?<b>(.+)</b>

[URL_1_2]
;Humidity
regexsearch=<td>Humidity</td>[\s\S]*?<b>(.+)</b>
regexoccur=1
regexflags=0
```

These two searches find the Temperature and the Humidity on the m.wund.com web site. Each scrape section fires its own Trigger, with the captured value in LOCAL1 (see the trigger setup below). Up to 9 parameters can be captured. If more are needed, a second section (i.e., [URL_2]) can be defined to capture additional data.
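The Python sketch below runs the two patterns from [URL_1_1] and [URL_1_2] over a small HTML fragment to show what each `regexsearch` hands back.

It is only an approximation, for these reasons:

- The plugin uses the VBScript engine; Python's `re` merely behaves the same way for these simple patterns.
- The HTML fragment is invented, not copied from m.wund.com.
- Reading `regexoccur` as "which match to use" is an assumption.

Only capture group 1 is returned, which is the value that lands in LOCAL1.

```python
import re

# Invented HTML fragment for illustration only; the real m.wund.com markup may differ.
SAMPLE_HTML = """<table>
<tr><td>Temperature</td>
<td><span><b>72.3&deg;F</b></span></td></tr>
<tr><td>Humidity</td>
<td><b>65%</b></td></tr>
</table>"""

# The regexsearch= patterns from [URL_1_1] and [URL_1_2], unchanged.
PATTERNS = {
    "URL_1_1": r"<td>Temperature</td>[\s\S]*?<b>(.+)</b>",
    "URL_1_2": r"<td>Humidity</td>[\s\S]*?<b>(.+)</b>",
}


def scrape(page, pattern, occur=1):
    """Return capture group 1 of the occur-th match (assumed meaning of regexoccur), or None."""
    matches = list(re.finditer(pattern, page))
    if len(matches) < occur:
        return None
    return matches[occur - 1].group(1)


for section, pattern in PATTERNS.items():
    print(section, "->", scrape(SAMPLE_HTML, pattern))
```

Note that `(.+)` is greedy. `[\s\S]` crosses line breaks, but `.` does not, so the capture always ends at the last `</b>` on its line. If a line held two bold values, such as `<b>72</b> / <b>22</b>`, the capture would be `72</b> / <b>22`. A lazy `(.+?)` stops at the first `</b>` instead.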

NOTE: The [URL_x] sections are independent of each other. They can be used to capture additional data from the same site, as described above. They can also be pointed at entirely different sites, with different frequencies and scrape counts for different purposes (e.g., weather on one and football scores on another).

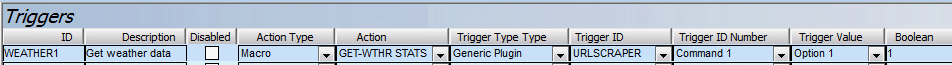

Create a new generic plugin trigger for each of the scrape sections ([URL_1_1] and [URL_1_2]). You can give them IDs of WEATHER1 and WEATHER2. Set the Trigger ID number to "Command 1" for both. Set the Trigger Value to "Option 1" for WEATHER1 and "Option 2" for WEATHER2.

When the WEATHER1 trigger fires, the Temperature will be in LOCAL1. When WEATHER2 fires the Humidity will be in LOCAL1.

If you wanted a single trigger carrying both temperature and humidity, you would need a single scrape (URL_1_1) with two snap sections. The temperature would then be in LOCAL1 (if it occurs first on the page) and the humidity in LOCAL2.
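The Python sketch below assumes that each "snap" corresponds to one capture group in a single regular expression. Under that assumption, it shows the ordering rule: whichever value is captured first lands in LOCAL1.

The combined pattern and the LOCALn mapping are hypothetical illustrations, not the plugin's documented ini syntax. Python's `re` again stands in for the VBScript engine.

```python
import re

# Invented HTML fragment for illustration only.
PAGE = """<tr><td>Temperature</td>
<td><b>72.3&deg;F</b></td></tr>
<tr><td>Humidity</td>
<td><b>65%</b></td></tr>"""

# Hypothetical single pattern with two capture groups: temperature first, humidity second.
COMBINED = (
    r"<td>Temperature</td>[\s\S]*?<b>(.+?)</b>"
    r"[\s\S]*?<td>Humidity</td>[\s\S]*?<b>(.+?)</b>"
)


def to_locals(page, pattern):
    """Map capture groups 1..n onto LOCAL1..LOCALn (assumed behaviour); {} if no match."""
    match = re.search(pattern, page)
    if match is None:
        return {}
    return {f"LOCAL{i}": value for i, value in enumerate(match.groups(), start=1)}


print(to_locals(PAGE, COMBINED))
```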

As noted above in the URL_1_1 description, up to 5 search matches can be captured in a single trigger. If more are needed, a second URL_x group (i.e., URL_2) must be defined; it can pass five more data elements.

All Triggers for URL_1 extractions use a "Trigger ID Number" of "Command 1". The "Trigger Value" is "Option 1" for the first (LOCAL1) snap, "Option 2" for the second (LOCAL2) snap, and so on.

URL_2 extractions are triggered by "Command 2" triggers. These use "Option 1" (LOCAL1) for the first snap in this second URL group, "Option 2" (LOCAL2) for the next snap, and so on.
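The trigger naming follows a mechanical rule, as in the WEATHER1/WEATHER2 example:

- the first number in a scrape section name selects the Trigger ID (`Command x`);
- the second number selects the Trigger Value (`Option y`).

The Python helper `trigger_for` below is a hypothetical illustration of that rule. It could be used to check trigger definitions against an ini file; it is not part of PowerHome.

```python
import re


def trigger_for(section):
    """Map a scrape section name such as 'URL_1_2' to (Trigger ID, Trigger Value)."""
    match = re.fullmatch(r"URL_(\d+)_(\d+)", section)
    if match is None:
        raise ValueError(f"not a scrape section name: {section!r}")
    url_group, scrape_number = match.groups()
    return f"Command {url_group}", f"Option {scrape_number}"


# WEATHER1 and WEATHER2 from the example above.
print(trigger_for("URL_1_1"))  # ('Command 1', 'Option 1')
print(trigger_for("URL_1_2"))  # ('Command 1', 'Option 2')
```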

The following example demonstrates an extraordinarily efficient way to extract weather data [special thanks to "snoker", who discovered this!]

Weather Underground has a free "developer API" you can sign up for. It will VERY quickly return a customized XML- or JSON-formatted document with only the raw information needed (the examples below use JSON). This includes a full complement of weather data combined with precipitation over time.

You have to sign up for a free API key. Be sure to pick the free option, which is the developer/500 calls-per-day/10 calls-per-minute option; for any larger volumes they sensibly want you to pay.
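It is worth checking a scrape frequency against those limits before pointing the scraper at the API. The Python sketch below makes two assumptions that this text does not confirm:

- the ini `freq=` value is in minutes;
- each scrape cycle makes exactly one API call.

`calls_per_day` and `within_free_tier` are hypothetical helpers.

```python
MINUTES_PER_DAY = 24 * 60


def calls_per_day(freq_minutes, urls=1):
    """API calls per day if each of `urls` sections is fetched every freq_minutes (assumed unit)."""
    return urls * MINUTES_PER_DAY / freq_minutes


def within_free_tier(freq_minutes, urls=1, daily_limit=500, per_minute_limit=10):
    """True if the polling rate fits the free developer limits quoted above."""
    per_minute = urls / freq_minutes
    return calls_per_day(freq_minutes, urls) <= daily_limit and per_minute <= per_minute_limit


print(calls_per_day(15.0))     # 96.0 (freq=15.0, as in the API example ini)
print(within_free_tier(15.0))  # True
print(within_free_tier(2.5))   # False: 576 calls per day
```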

You simply register a free account with them, and they send a confirmation e-mail for the account creation. Once you're in, go through their "cart" and check out. As mentioned above, there is no charge to you if you pick the correct option.

You are then issued an API key (keep it private, for obvious reasons).

The documentation (http://www.wunderground.com/weather/api/d/docs) is fairly straightforward. You can call by LAT/LON or by city location. You can also look up a specific station by its ID, if you know of a maintained station nearby at a school, airport, etc. Here is an example call, using the Weather Underground demo API key, that goes after a specific weather station:

ph_geturl1("http://api.wunderground.com/api/6f4c56e1c72e7c82/geolookup/conditions/q/pws:KCOHIGHL5.json",1,20)

...and here is an example of how to call out to a city location instead:

ph_geturl1("http://api.wunderground.com/api/6f4c56e1c72e7c82/conditions/q/CA/San_Francisco.json",1,20)
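Both calls follow the same pattern, in this order:

1. the API root;
2. the key;
3. one or more feature names;
4. `q/`;
5. the location query, ending in `.json`.

The Python helper `build_url` below assembles such URLs so the key is kept in one place. It is hypothetical and inferred only from these two examples. The key in the usage lines is a placeholder.

```python
API_ROOT = "http://api.wunderground.com/api"


def build_url(api_key, features, query):
    """Assemble <root>/<key>/<feature>/.../q/<query>.json, mirroring the two examples above."""
    return f"{API_ROOT}/{api_key}/{'/'.join(features)}/q/{query}.json"


YOUR_KEY = "0123456789abcdef"  # placeholder only: substitute your own API key
print(build_url(YOUR_KEY, ["geolookup", "conditions"], "pws:KCOHIGHL5"))
print(build_url(YOUR_KEY, ["conditions"], "CA/San_Francisco"))
```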

NOTE: You have to put your own API key in place of theirs (6f4c56e1c72e7c82) used here. They most likely cycle these demo keys and disable them frequently, so the examples may not work until you insert your own key.

Also, if you want to examine the raw output, you can use either of the two example methods above. However, the output is so voluminous that you will have to write it to a file with the ph_writefile() function to see the full results.

But you have to open the result in something like Notepad++ to see it... and it's AWESOME (excerpt below):

```
...
"weather":"Clear",
"temperature_string":"69.5 F (20.8 C)",
"temp_f":69.5,
"temp_c":20.8,
"relative_humidity":"57%",
"wind_string":"From the West at 1.2 MPH Gusting to 3.0 MPH",
"wind_dir":"West",
"wind_degrees":270,
"wind_mph":1.2,
"wind_gust_mph":"3.0",
"wind_kph":1.9,
"wind_gust_kph":"4.8",
"pressure_mb":"1015",
...
```
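Each field in this output sits on its own line. That is why the `"temp_f":(.+),` pattern used in the ini below works even though `(.+)` is greedy: `.` does not cross a line break, so the match stops at the comma ending that line.

The Python sketch below checks this against a few lines of the excerpt, with `re` again standing in for the VBScript engine. It also shows what would go wrong if the same data arrived as a single compact line. Whether the real response has line breaks is an assumption worth verifying. A bounded class such as `([^,]+)` avoids the problem either way.

```python
import re

# A few lines copied from the excerpt above.
EXCERPT = '''"weather":"Clear",
"temp_f":69.5,
"temp_c":20.8,
"wind_mph":1.2,'''

# The same fields run together on one line, as a compact JSON response would be.
ONE_LINE = EXCERPT.replace("\n", "")

GREEDY = r'"temp_f":(.+),'      # the pattern from the ini below
BOUNDED = r'"temp_f":([^,]+),'  # stops at the first comma after the value


def first_group(pattern, text):
    """Return capture group 1 of the first match, or None."""
    match = re.search(pattern, text)
    return match.group(1) if match else None


print(first_group(GREEDY, EXCERPT))    # 69.5
print(first_group(GREEDY, ONE_LINE))   # 69.5,"temp_c":20.8,"wind_mph":1.2
print(first_group(BOUNDED, ONE_LINE))  # 69.5
```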

The following URLScraper ini structure will access the weather site via its API and scrape off the Temperature and wind speed data elements. These are passed via the Trigger in LOCAL1 and LOCAL2 respectively. If the Trigger Action Type field is set to a Macro, that macro can then use the data passed in LOCAL1...LOCALx as appropriate.

NOTE: all of the scrapes could also be captured in a single URL_1_1 scrape as illustrated in the "five (5) snaps off a page" example under the "Configuring the urlscraper.ini file" heading above.

NOTE: Setting regexflags=2 fires the Trigger only when the weather data actually changes from one reading to the next. If nothing has changed, no attempt is made to fire the Trigger, which minimizes extra CPU load.

```ini
[URL_1]
url=http://api.wunderground.com/api/7d6ed69e542ccec1/conditions/q/MA/Boston.json
freq=15.0
scrapecount=1

[URL_1_1]
regexsearch="temp_f":(.+),
regexoccur=1
regexflags=0
```
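The `regexflags=2` behaviour described in the note above (fire the Trigger only when a value changes) amounts to remembering the last value for each scrape section. The Python class below is a hypothetical model of that idea, useful for reasoning about when a Trigger would fire. It is not the plugin's code. It also assumes the first reading after start-up fires, since there is nothing to compare it with.

```python
class ChangeFilter:
    """Remember the last value per scrape section and report only changes."""

    def __init__(self):
        self._last = {}

    def should_fire(self, section, value):
        """True on the first reading for a section, or when the value differs from the last one."""
        changed = section not in self._last or self._last[section] != value
        self._last[section] = value
        return changed


readings = ["69.5", "69.5", "70.1", "70.1"]
gate = ChangeFilter()
print([gate.should_fire("URL_1_1", r) for r in readings])  # [True, False, True, False]
```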